AI: The New Cybersecurity Attack Surface

IIS Executive Insights Cyber Expert: David Piesse, CRO, Cymar

Click here to read David Piesse's bio

View More Articles Like This >

Definitions

Agent—Software that performs tasks as part of the workforce.

AI factory—Data centre that converts language to mathematical tokens to process AI.

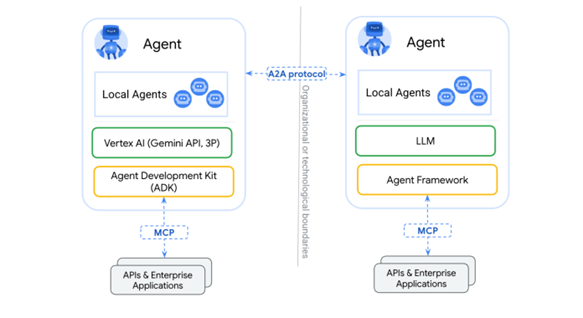

Agent-to-agent protocol (A2A)—Agents that communicate with each other across networks.

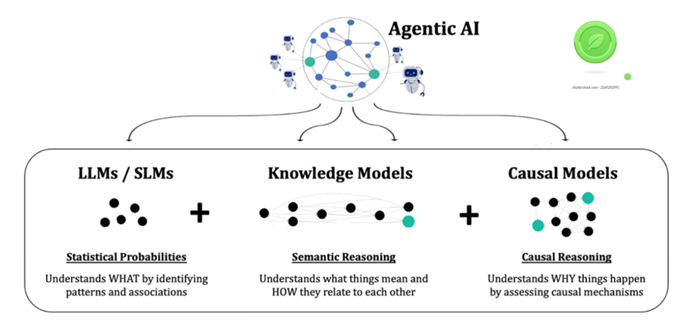

Agentic AI—Autonomous workflow management by software agents.

AI tokens—The breakdown of language into words and sub-words that are split into blockchain tokens.

API endpoint – Application Programming Interface. A web address path where a user can perform an operation on the server.

Credential stuffing—An automatic cyberattack that steals accounts and log-in credentials.

Dark Web—The hidden part of the internet known for illegal transactions and sales.

Data poisoning—Adversarial cyberattack that injects malicious content into the LLM.

FIDO (fast identity online) passkey—Sign-on capability that doesn’t use a password.

Generative AI (GenAI) - branch of AI creating original content based on linear regression.

Infostealer—Malicious software designed to steal sensitive information.

Jailbreaking—Removing the safety limitations and filters from the LLM.

Knowledge Graph – representation of data relationships to be used as a training model.

Large language model (LLM) —deep learning model trained on vast amounts of data.

LLMjacking—Using stolen cloud credentials to gain access to cloud-based LLMs.

Metadata – data about data. Part of the structure of data that identifies location.

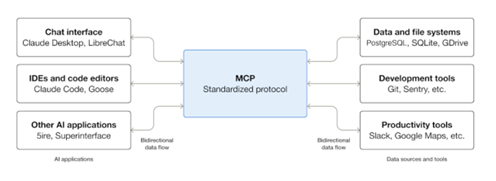

Model context protocol (MCP) —Open-source standard for agents to access external data.

Phishing—Cyberattack where criminals impersonate users or agents to trick people into providing sensitive information.

Prompt injection attack—A cyberattack that targets AI prompts and injects malicious instructions either directly or indirectly.

Shadow AI—Data created, stored, or shared without IT approval.

Shadow leak—A server-side data leak using indirect prompt injection.

Social engineering—Phishing.

Tool poisoning—Malicious instructions embedded in MCP tools.

User interface (UI)—The point where humans and machines interact.

Zero click vulnerability—A cyberattack exploit that happens without user interaction.

Zero-day attack—An unknown cyber vulnerability that has not been patched or fixed.

Overview

The confluence of agentic AI and cyberspace is changing the internet as we know it today. Two standards driving change are the model context protocol[i] and agent-to-agent protocol.[ii] These protocols give software agents the freedom to access external systems and to communicate seamlessly across diverse platforms and networks. While such access improves productivity, it’s also where new attack surfaces for bad actors emerge, as every document, email, and webpage can become a potential attack vector.

Agentic AI is transforming into a default operating system feature and is introducing a cyber baseline risk called prompt injection. The Open Worldwide Application Security Project (OWASP)[iii] ranked prompt injection as the top AI vulnerability risk that can manipulate LLMs through adversarial inputs by social engineering (agent phishing) or jailbreaking.

Security is vital for protecting the digital world. Traditional cybersecurity risks include malware, phishing, denial-of-service attacks, ransomware, and structured query language (SQL) injection. Detection of and response to these risks are based on preestablished security rules, which react to zero-day attacks, unknown threats but can be bypassed by AI threats.

AI security uses machine learning and data analysis to learn from past attacks, detect threats, and dynamically adapt to new ones. Such security presents a dual challenge for cyber professionals: empowering defenders with advanced threat detection and response while equipping attackers with intelligent tools such as malware and deepfakes.

These challenges reshape the risk landscape, forcing cyber insurers and risk managers to re-evaluate policy coverage, insurability, and underwriting practices, and to adapt to evolving government regulations and amplification of liability, as evidenced by real-world incidents. The importance of human judgment alongside AI is paramount as language and words are the new attack weapons, providing an extensive attack surface requiring constant detection.

When AI is introduced to enterprises, a digital brain makes decisions, and like a person, it can be vulnerable and manipulated. Malicious code can steal private keys, identities, and data and package it for sale on the dark web. Open-source models contain many agents that run code and install other tools, increasing vulnerability.

AI cyberattacks cost organizations billions of dollars annually, with future losses estimated in the trillions. Agentic AI exacerbates financial risks by scaling sophisticated attacks, automating fraud, and creating advanced social engineering tactics.

Annual global cybercrime damages are projected to reach $10.5 trillion this year and increase to $15.6 trillion by 2029.[iv] Deloitte predicts that GenAI could drive fraud losses in the United States to $40 billion by 2027, increasing from $12.3 billion in 2023.[v] Gartner also predicts that 40 percent of agentic AI projects will be cancelled by 2027[vi] due to fraud costs. The financial cost of deepfake fraud per attack averages between $450,000 to $600,000, while 80 percent of organizations lack protocols for handling deepfake attacks.[vii]

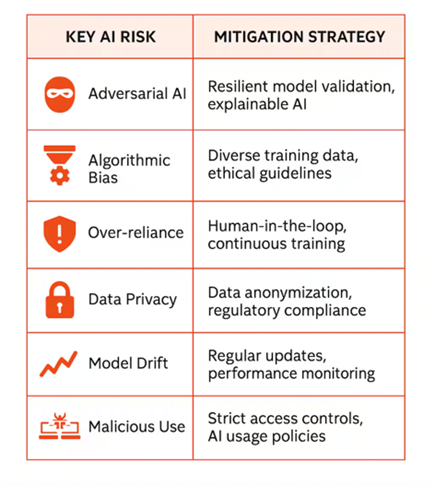

Adversarial AI

The diagram shows six major types of AI-driven cybersecurity risks—all of which are difficult to detect and require AI-powered defence, as they bypass traditional rule-based systems.

Source: Author compilation from the web.

Prompt injection involves an attacker manipulating an LLM by inserting malicious instructions into a prompt. This causes the AI machine learning and the LLM to behave in unintended ways, such as leaking sensitive data or executing unauthorized commands, bypassing safety filters.

The LLM can’t distinguish between legitimate system instructions and user-provided text. LLMs are designed to follow trusted instructions, but they can be manipulated into carrying out unintended responses through carefully crafted inputs. This injection can be entered directly into the prompt. LLMs with web-browsing capabilities can be targeted by indirect prompt injection, where adversarial prompts are embedded within website content.

When the LLM retrieves and processes the webpage, it interprets and executes the embedded instructions as legitimate commands. Prompt injection exploits the fact that both the developer's instructions (system prompt) and the user's input are processed as natural language text, allowing malicious text to override original commands. Unlike traditional cyberattacks, prompt injection can be performed by anyone who can craft credible natural language. The risk and impact are serious such as incorrect or biased outputs that can affect decision-making and unauthorized access across connected systems.

Source: Radware [ix]

Data poisoning is an attack that involves malicious data being deliberately injected into a machine learning model's training dataset to corrupt behaviour. Unlike prompt injection, which manipulates live models with crafted inputs, data poisoning targets the model's foundational training data, leading to compromised accuracy and reliability in future outputs. By introducing new data or changing existing data, even the smallest factual error can create bad outcomes.

Mislabeling data, such as poisoning medical imaging datasets by intentionally tagging cancerous tumours as benign, could lead to delayed or improper patient treatments. In finance, fraudulent transactions in a model's training data can be labelled as legitimate, causing fraud patterns to be ignored. Tools used by agentic AI with the MCP can also be poisoned as attack vectors.

Source: Sharad Gupta [x]

Infection involves an AI system being infected with malware through trojan horses or back doors, emanating from the software supply chain. Many LLMs are downloaded, so they could be infected to provide inaccurate results. AI malware adapts its behaviour, changes its code, and evades detection in real time, making it more resilient than traditional malware.

In an evasion attack, AI inputs are modified to generate undesirable results. Self-driving cars, for example, can be manipulated to misread stop signs. Pictures can be altered with an unnoticeable layer of noise, causing the AI to identify it as a different image. Evasion attacks can also add subtle noise to audio file waveforms to fool speech recognition systems.

Extraction involves attackers stealing the valuable intellectual property (IP) that is used to train and tune the models. They do this by entering extensive queries (model inversion) into the system, lifting data, and slowly stealing the whole AI model.

An AI denial-of-service (DoS) attack occurs when attackers overwhelm an AI system with excessive requests and the system then denies access to legitimate users. A DoS attack is a catastrophic-level risk when a large percentage of the workforce consists of AI software agents because the attack cripples their core operations. AI systems analyse patterns in the network to determine the best time to strike, such as peak business hours.

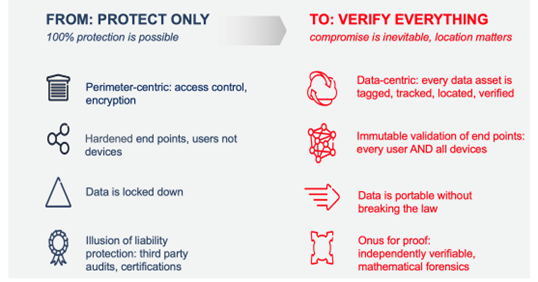

Identity Theft

Cybersecurity focuses on confidentiality (encryption), integrity, and availability.[xi] Integrity ensures that sensitive data is accessible only to authorized people and that it has not been tampered. IT security historically focused on confidentiality and availability, but many past attacks were integrity attacks. In the AI era, integrity attacks can’t be ignored, especially those involving credentials and identity theft.

Source: Guardtime[xii]

AI extends the integrity threat to identity theft through improper use of a valid account to gain credentials. These infostealer[xiii] attacks use malware in apps or emails to steal credentials. Defenders must monitor dark web forums to look for stolen AI packages. Software agents that don’t have restrictions can write malware unchecked.

Identity is the new perimeter, and it's easier to log in than to hack in. Preregistering mobile phones and using biometrics reduce the risk of bad actors logging in and replacing passwords. FIDO passkeys[xiv] are also an important security option.

Automated credential stuffing tools allow attackers to conduct AI phishing through a prompt, bypassing multifactor authentication. An ecosystem and supply chain exist around credential theft: Malware collects, verifies, and decrypts accounts, and then account traders quickly post the identities for sale in dark web marketplaces, within a short space of time.

Many of the credential stuffing scripts explicitly target API endpoints, focusing on data exfiltration. with older versions of APIs being particularly vulnerable. API business logic attacks exploit legitimate workflows to gain unauthorized access without triggering security alarms, so advanced detection methods are required.

AI agents have underlying risks in their identities, more than those of human and machine identities, with gaps in governance and unintended actions. According to a Sailpoint report, 82 percent of companies now use AI agents,[xv] and many access sensitive data. In addition, 80 percent of organizations have experienced unintended actions from their AI agents, including inappropriate data sharing and unauthorized system access that revealed credentials. This has led technology professionals to identify AI agents as a growing cybersecurity threat.

AI agents require multiple identities to access data, applications, and systems. This requires broad privileges that are hard to govern. AI agents tend to be goal-based—that is, a task is given and the agent must find the information and resources to satisfy that request, running the risk of accessing unauthorized systems or sensitive data in the process. AI agents can also access the internet, introducing unverified data into their outputs.

Executives, compliance and legal teams can be unaware of the data AI agents access. Governance of AI agents begins with knowing the data they access and sharing that information with those responsible for compliance and data protection. Lack of visibility into data access has resulted in difficulties tracking and auditing data used or shared by AI agents.

AI Tokenization

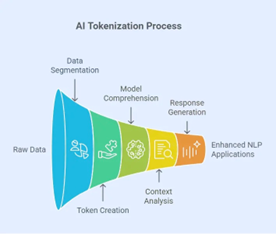

Tokenization is a foundational process that enables AI to process and secure data for various applications. It significantly strengthens the security and management of systems.

In natural language processing (NLP), tokenization is a pre-processing step where raw text is broken down into smaller units (words, subwords, or characters) called tokens. AI models process tokens to learn the relationships between them. The faster tokens can be processed, the quicker models can learn and respond. AI factory data centres convert these natural language tokens (human language) into a numerical format that machine learning models can understand and interpret efficiently.[xvi]

Tokens are essential in the AI process. They help with real-time fraud detection by analysing patterns. They also enable data sharing, as tokenization allows AI models to train on large, sensitive datasets without directly accessing the raw, private information.

AI can implement and adjust granular access controls based on user behaviour, context, and risk assessment so that only authorized individuals or AI agents can detokenize and access sensitive data. AI models themselves, along with their underlying data and algorithms, can be tokenized on a blockchain to create secure, traceable, and monetizable digital assets, ensuring transparent ownership, provenance, and usage rights in a decentralized intelligence economy.

Source: Cocolevio [xvii]

Understanding AI tokenization is important. It’s shaping how we handle and protect information, and it’s critical in transforming raw language into a machine-readable format. Tokenization is a cornerstone of LLMs, enabling them to understand, translate, generate, and analyze text across various applications and languages. AI tokenization is critical to unlocking the potential of language, and tokens are the building blocks of AI.

An adversary attack known as TokenBreak[xviii] was discovered in 2025 by Hidden Layer.[xix] TokenBreak targets tokens and can bypass safety filters. It opens models to prompt injection attacks, but it can be mitigated by proper token standards.

Source: Hidden Layer

MCP Protocol and Internet Standards

MCP is an open protocol that standardizes how applications provide context to LLMs. It consists of applications, clients, and servers.

Source: GITHUB [xx]

Network traffic originating from machines and chatbots has reached 50 percent of internet traffic,[xxi] and that rate will only increase with the use of personal assistants like Microsoft Copilot GPT[xxii] and agentic AI software agents. The internet is built on standards, and new standards have emerged in the AI space. These include MCP and A2A protocols, plus others that allow software agents to interact with other agents, networks, and external systems.

MCP, which was introduced by Anthropic at the end of 2024 [xxiii], allows AI agents to connect with servers and tools. It can also function in a cloud environment. Human language prompts initiate interactions within the MCP.

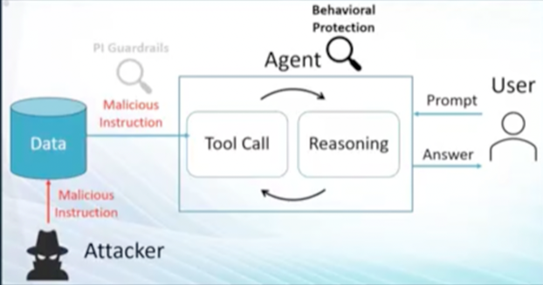

The MCP community’s thousands of servers create an ecosystem that drives the capability of AI agents and LLMs beyond a chat interface to agentic AI. The risk here is that AI agents access data by chaining together reasoning tools in a continuous loop to complete the task. If an agent is tasked with condensing email content, for example, it fetches the email, reads it, accesses a web context tool, and then synthesizes the results into a summary.

This journey could involve accessing tools such as Open AI Deep Research[xxiv] and reading emails from Gmail. These steps occur on the server side, so the agent is an autonomous process in the cloud, having access to both user data and the internet. The instructions are in natural language, but malicious instructions can be hidden inside the data in the email, which looks normal to the user. This is indirect prompt injection targeting the lack of separation between data and instruction. The attacker can mix untrusted data and instructions together and cause the agent to follow its command instead of the user's prompt.

In a direct prompt injection attack, the attacker uses a genAI prompt interface and natural language to trick the model into aberrant actions (jailbreaking) and then scales up when successful. A zero-click indirect prompt injection vulnerability was discovered in the ChatGPT deep research agent and disclosed to OpenAI in 2024. [xxv] Attackers used a white font on a white background to conceal malicious comments in the metadata, so the agent read the raw document and interpreted that text. Sensitive data was then exfiltrated as the human’s AI assistant was tricked into clicking on the malicious link.

This zero‑click indirect prompt injection vulnerability became known as ShadowLeak.[xxvi] Its occurs when OpenAI’s ChatGPT connects to enterprise Gmail and browses the web. The attack leaves no forensic evidence, and the organization never sees information leaving its network because its open AI infrastructure leaks private data that the AI assistant is reading.

The EchoLeak[xxvii] and Agent Flayer[xxviii] attacks tricked the agent into rendering an infected image in the user interface of the client’s chat interface to exfiltrate information.

Protection guardrails include scanning text that goes into the model for malicious instructions and flagging them. This protection involves observing agent actions to ensure they’re aligned with user intent. MCP server responses can be written by anyone in the open-source community. LLMs that the AI uses are naïve and trusting, so the data must be sanitized to eliminate untrusted data. An agent protection service that continuously observes the agent must also be used for better protection. The A2A protocol by Google enables AI agents to interact and collaborate in conjunction with the MCP. The key cybersecurity issues are identity protection and avoiding data leakage.

Source: Linux Foundation

Mitigating Adversarial AI

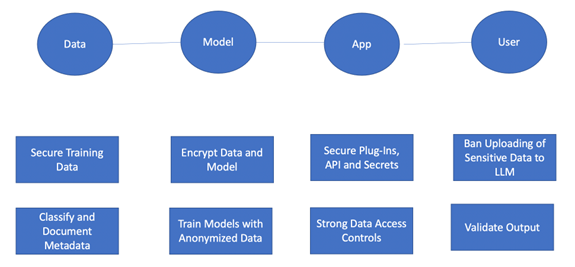

Explainable AI[xxix] establishes trust and legal compliance to minimise risk and achieve better outcomes. Overreliance on AI automation without human oversight or expertise creates new vulnerabilities such as security teams relying on AI to mitigate threats that the AI has not been trained to find. Yet the exponential growth of AI technology is outpacing the development of regulatory frameworks, data protection laws, and industry-specific regulations. Best practices for threat mitigation are shown in the diagram below.

Source: Author based on Thales [xxx] Best Practices and Guidelines

It’s key to harmonize data to make it AI ready and to separate external data from system instructions to prevent it from being interpreted as a prompt. It’s important to monitor outputs for signs of a successful injection attack, which could include unexpected behaviour and the disclosure of sensitive information. The National Institute of Standards and Technology (NIST) describes key adversarial mitigation tactics.[xxxi]

Comprehensive cybersecurity frameworks ensure that AI systems are secure. They must, however, cover the AI model’s entire life cycle. Deployed AI models require continuous monitoring to ensure ongoing effectiveness and to detect any signs of compromise or model drift—the degradation of a machine learning model’s performance over time due to changes in real-world data. Training data can become outdated, leading to inaccurate predictions, so mitigating model drift requires retraining the model with fresh data.

Preventing Prompt Injection

The application prompt layer in AI identifies activity across enterprises, such as agentic agents, human users, AI runtime workloads, and AI factories. Shadow AI activities must be identified because workforces that use AI tools without IT authorization can cause sensitive data leaks into third-party systems. To effectively secure workforce use of AI against prompt injection attacks, access policies must be enforced across all assets. The user interface is a prime attack surface when securing LLM applications/workloads, and many enterprises lack governance controls to achieve unified security solutions that span workforce and workload.

Data leak detection capabilities look for evidence of leaks that traverse prompts and agentic AI access activity with granular response capabilities to generate event-based alerts, prevent access, and remove malicious content. Prompt injection attacks can occur in any language. To prevent them, organizations must be able to detect malicious URLs or files traversing a system, prevent machine code input, and ensure governance policy is actioned at scale with runtime security for agents. They must also limit shadow IT activity, which can be accomplished by blocking access to Deepseek and the commercial version of ChatGPT and by directing the workforce to use the organization’s own knowledge base.

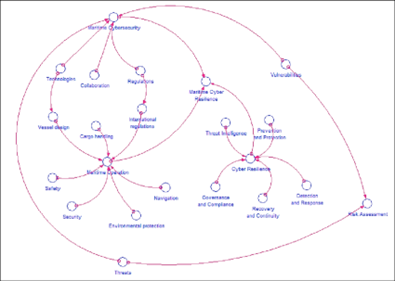

Causal Reasoning and AI Cybersecurity (Causal AI)

Causal AI is a branch of AI known as computational linguistics.[xxxii] Causality in cybersecurity refers to the study of cause-and-effect relationships to understand, predict, and defend against cyber threats. It moves beyond correlation to identify the underlying reasons for attacks, find the root cause of intrusions, evaluate security controls, and predict vulnerabilities. It involves the capture, storage and reuse of knowledge.

Using causal reasoning enables more effective threat detection, risk assessment, and defence strategies. By analysing the direct chain of events the root cause distinguishes if a detected anomaly was caused by a cyberattack or a non-malicious action. By understanding the causal factors of past attacks, future vulnerabilities are revealed.

Causal AI goes beyond pattern recognition to understand why an attack happened. Causal reasoning can help identify potential privacy risks by modelling the chain of events that could lead from a data breach to the exposure of sensitive information. Future cyberattack rates can be forecast based on historical time-series data.

The causal diagram below maps a potential maritime scenario to demonstrate the cause and effect of cyberattacks at sea.

Source: Researchgate[xxxiii]

Understanding how a cyberattack unfolds is key to defending against it. However, ascertaining that an attack is taking place and determining the optimal defence can be difficult. In current cybersecurity systems, information is combined from correlated events that aren’t necessarily causally related, which can lead to inaccuracy.

Causal models capture knowledge from expert chief information security officers that describes the relationships between indicators of anomalous behaviour and root cause. Causal inference determines the signals that could lead to other vulnerabilities. Machine learning models can predict potential vulnerabilities and cyberattacks before they occur by analysing threat patterns. In turn, accurate indexes can be created and used without fear of basis risk (false triggers) in risk management, insurance-linked securities, and parametric insurance. A combined accumulation risk index and zero-day attack index,[xxxiv] can be used to quantify systemic risk, such as correlated vulnerabilities and single points of failure across portfolios, to understand and mitigate accumulation risk.

The accumulation risk index is a unified, explainable risk score that reflects how risk factors aggregate across an organization’s entire attack surface. It continuously measures the build-up of latent vulnerabilities, misconfigurations, exposure gaps, drift, and insider threat indicators that collectively heighten the probability of a breach. Security and risk teams leverage the index to spot emerging accumulation “hot spots.”

The zero-day attack index is a single, dynamically updated score that reflects the real-time risk of undisclosed (zero-day) vulnerabilities being exploited across an enterprise or supply chain. It aggregates multiple causal risk signals, threat feed indicators, network telemetry anomalies, patch management gaps, vendor exposure levels, and dark-web chatter into one interpretable score. By leveraging the expert-defined index methodology, security and risk teams pinpoint true drivers of zero-day risk, anticipate exploit surges, and prioritize real time mitigations, moving from reactive “exploit-detected” to proactive “risk-rising” alerts.

Causal reasoning also allows counterfactual analysis and intervention, which can show how cyberattacks may have been quantifiably worse if circumstances had evolved differently. Due to the dynamic nature of cyber risk, no two events are the same, so counterfactual analysis and intervention can help explore alternative event characteristics, narratives, and losses.

Cloud Security Changes

As cloud computing hides the difficulty of running complex computing infrastructure, it also hides security information. This is where trust problems arise and adversaries leverage AI to augment their operations. Data becomes a new perimeter and everybody an insider threat.

Nation-state and criminal adversaries operationalize and harness AI to scale attacks that were previously out of reach. Meanwhile, the good guys are implementing AI in the cloud, in code generation, and in automation. These functions are accelerating cloud intrusions, making the cloud an organization's greatest enabler and risk simultaneously. This double edge demonstrates a significant shift: With AI the risk is constantly regenerating unlike traditional IT, where once a risk was patched it was fixed for a certain time period.

The cloud involves thousands of constantly changing configurations and services, so it remains a moving target for misconfigurations and evolving attack surfaces. Tools are being developed to address this, such as Charlotte AI,[xxxv] which was launched by Crowdstrike. Charlotte AI uses agentic AI to do the heavy lifting of analyzing and scanning.

The cloud has so many vulnerabilities and alerts that it’s difficult for human security teams to prioritize them and discern between false positives and negatives. The AI defense tool allows Common Vulnerability Scoring System (CVSS) [xxxvi] scores to be prioritized so the most serious vulnerabilities can be identified. Adversaries can make enterprises pay for their AI usage by hijacking cloud accounts, deploying LLMs, leaving enterprises with bills while operating undetected. Central processing units are expensive, and token usage can add up quickly, making these high-value assets direct targets for outside adversaries.

Current Events

GenAI is now a top data-exfiltration channel[xxxvii] that removes data from a network, according to the 2025 Browser Security Report (summarized by The Hacker News).[xxxviii] The Japanese government provided a warning about AI hallucination risk after a wild mushroom was misidentified and caused a heath safety risk.[xxxix] “Adversarial poetry” has emerged as a universal jailbreak style that involves crafting “adversarial poems” —benign-looking, rhyming prompts that encode harmful instructions to reliably jailbreak multiple frontier LLMs.[xl] LegalPwn[xli] is a recent prompt attack method that injects privacy policies and legal documents to bypass LLM safety and security checks.

Anthropic detected the world’s first AI-led hacking campaign[xlii] where Claude’s code was manipulated to carry out 80 percent to 90 percent of a highly sophisticated cyberattack that required little human involvement. The attack aimed to infiltrate government agencies, financial institutions, tech firms, and chemical manufacturing companies, though the operation was only successful in a small number of cases.

AI-assisted hacking poses a serious threat. Modern AI models can write and adapt exploit code, sift through huge volumes of stolen data, and orchestrate tools faster and more cheaply than human teams. They lower the skills barrier for entry and increase the scale of the attack.

The following chart shows some significant events, but the list is not exhaustive. The key takeaway is the increase in credential attacks in 2025 due to prompt injection threats.

| Event | Impact | Resolution |

| Microsoft Tay Chatbot 2016[xlii] | Generated offensive language | Tool disabled |

| Samsung data leak ChatGPT[xliv] 2023 | Sensitive data leaked | Internal enquiry and penalties |

| Kyiv Deepfake Impersonation 2022[xlv] | Impostor alert given | Mitigation against future attacks |

| Ozy Media AI Voice Cloning[xlvi] 2024 | Misled Goldman Sachs | Ozy CEO prosecuted |

| Colonial Pipeline Attack 2021[xlvii] dark web | Disrupted fuel supply in the U.S. | Caused a state of emergency |

| Yumi Brands Ransomware 2023[xlviii] | Stole sensitive corporate data | Investigation and mitigation |

| “ShadowMQ” RCE pattern[xlix] 2025 | Affected code reuse globally | Patch provided |

| ConfusedPiloi[l] 2024 | LLM misinformation – RAG | Data poisoning irreversible |

| Shallowfake[li] Deepfake 2025 | Fake claims, motor damage, and pictures | Combat fraud tactics |

| Oracle Clop Cyberattack 2025 [lii] | Compromised University credentials. | Oracle issued a patch to fix |

| 700Credit Data Breach 2025 [liii] | 5.6 Million Credentials Compromised | Contained / Damage Limitation |

| Doordash Data Breach 2025 [liv] | Third Party Vendor Credentials Breach | Class Action Lawsuit |

| GeminiJack AI Vulnerability 2025 [lv] | Zero Click on GOOGLE share drives | Google provided the patch |

Source: Author

Deepfakes Fraud Detection and Insurance Claims

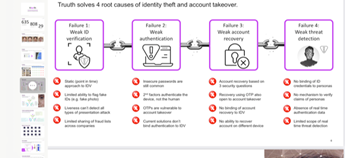

With the proliferation of AI tools and deepfake technologies, organizations are struggling to distinguish real requests from fake ones. A strong security-aware culture helps organizations respond to incidents. The insurance claims process is at high risk of AI deepfake attacks. While digital transformation helps insurers automate claims triage and processing, it also magnifies the attack surface and increases fraud incidents that involve AI-generated claims. Zurich[lvi] and Swiss Re[lvii] have emphasized this serious risk to the industry.

Source: Truuth[lviii]

Fraudsters have long been generating deepfake-altered images—including vehicle damage photos, medical X-rays, property loss reports, and invoices—and falsifying documents. Until recently, human experts could detect most AI-generated images, but multimodal AI models have evolved rapidly and most AI-generated images now aren’t discernible from authentic ones. Visual evidence, such as photos, is commonly trusted by triage systems and human adjudicators, and AI can produce convincing fabrications at scale. It’s therefore necessary that organizations ensure the integrity of documents and images and verify a claimant’s identity.

AI can generate names, faces, voices, and supporting stories to create synthetic customers who pass identity checks. To detect these synthetic profiles, organizations must strengthen their identity verification through biometrics and behavioural analytics. AI can also create fake businesses, which ghost brokers and sham vendors support through the creation of credible-looking websites, business documents, chatbots, and marketing materials.

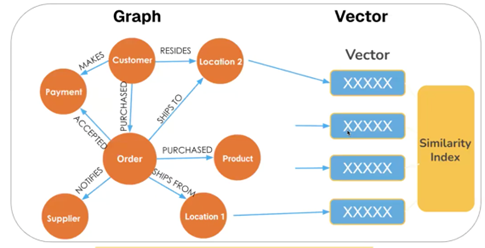

AI is enabling the creation of legitimate looking phishing so causal AI and knowledge graphs are required for behavioral analysis, forming a pattern across time, looking at the relationships of the entities involved with fraud detection in mind. Each graph entity is known as feature and when put together known as a feature set which becomes a more accurate training model for prediction and compliance improving the quality of LLM’s. LLM’s are called generative because it takes statistical patterns to generate new language content based on history but is not easily explained as the embedded vectors cannot be broken down. Knowledge graphs overcome this issue as TigerGraph [lix] illustrates below.

Source: TigerGraph

A complex hybrid knowledge graph is semantically rich to help with reasoning and decision making. The machine learning makes an accurate prediction of the fraud as the graph is adding new features. This full set of training data knows which samples are fraudulent and those non-fraudulent plus the model is fed examples to look at to figure out the pattern of the features that are predictive that are associated with the ones that are fraudulent.

Regulation

Regulation can slow the digital revolution as it lags behind AI’s capabilities. Large AI companies support regulation because it enhances safety and reduces uncertainty. AI is one of the most powerful industrial changes of our time but as yet not fully regulated. AI is a global challenge that requires collaboration, not competition, to establish trustworthy safeguards.

The EU AI Act[lx] has taken many steps to regulate AI, and many nations are developing their own AI positions, but global regulation is necessary. Frameworks for ethical AI are emerging, including the NIST AI Risk Management Framework [lxi] and the EU AI Act, which cover transparency, accountability, fairness, and human oversight. They provide guidelines for the responsible development and deployment of AI, especially cybersecurity, where AI helps automate the monitoring of tokenization systems to ensure compliance with regulations like the General Data Protection Regulation (GDPR), Health Insurance Portability and Accountability Act (HIPAA), and Payment Card Industry Data Security Standard (PCI DSS).

Measuring the return on investment for AI in cybersecurity involves assessing reductions in incident response times, decreased breach costs, improved threat detection rates, and the reallocation of human resources to higher-value tasks. These improvements can be quantified through metrics like mean time to detect (MTTD),[lxii] which is the average time to discover a security incident, and mean time to respond (MTTR),[lxiii] which is the average time to fix the problem from the time of discovery. These basic key performance indicators are necessary for regulatory investigations.

AI’s Impact on Insurance

AI predictive analytics, when used properly, can replace chance with certainty through analysis of behaviours and root causes. This could influence future actuarial and underwriting approaches. AI is changing the way people interact with technology. Regarding cyber risk, AI is involved in two primary types of infection or attack: Firstly AI-powered attacks, where AI is the weapon, and secondly attacks on AI systems, where AI is the target.

The insurance industry must develop the next generation of products that address these new, evolving AI risks. The products should not, however, be an extension of existing cyber policies. Instead, they should adopt the industry’s triangle of change: data-driven insurance-linked securities (ILS), parametric insurance, and embedded insurance.

The pooling of risk in some lines will eventually give way to individual policies based more on certainty and residual risk. Because AI is software technology, it alone is not a driver of change for the industry, but risk and customer perception are also changing. Cyber coverage has been around for a long time, and the industry must now explicitly define new protections for agentic AI, such as adding deepfake risks to policies, building operational resilience, and implementing data-driven underwriting for fortuitous events from machines and robots.

Insurance is a residual risk-transfer mechanism that should be secondary to client mitigation strategies. It can’t be the primary comprehensive cybersecurity solution. Organizations must understand the entire cyber threat landscape.

Corporate risk managers are now fully engaged with AI and tracking changes in exposures, but currently insurance industry underwriting standards outside of audits do not exist. Current claims practices centre around automated decision-making, bias, discrimination, intellectual property, and deepfakes, and most relate to training data, models, and their outputs. Education is required to improve loss and combined ratios.

Asset Tokenisation in the Year 2026 and Beyond

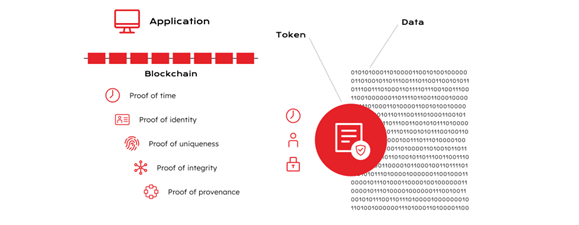

Tokenisation is going mainstream and one of the benefits not generally discussed well is it is more secure to have assets tokenised. However there are gold standards to tokenisation and if the token does not contain these basics by default as shown below, it will be an asset at risk.

Source: Guardtime [lxiv]

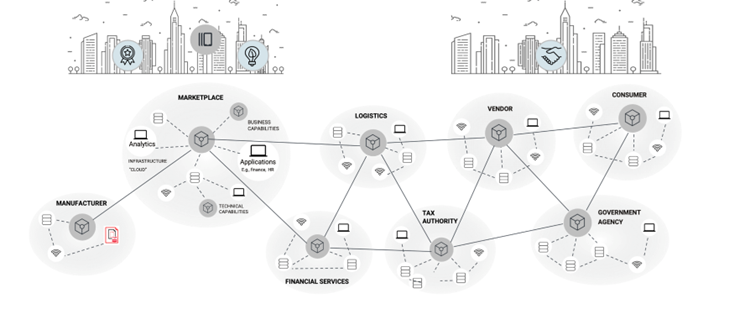

The tokenisation of supply chains (including software) must have interoperability with security so that a token can travel across organizational and network boundaries, be verified and acted upon on at each step without any of the parties in the supply chain needing to change behaviour. These information supply chains are made cryptographically verifiable so anyone in the supply chain can verify the provenance of data without relying on trusted parties. This is all sectors of business as illustrated.

Source: Guardtime

However, we are entering a period where stablecoin tokens will dominate the domestic and cross border payments space eventually giving rise to programmable money where AI is the brain and stablecoins the blood stream of the operation. [lxv]. Cryptocurrency exchanges will handle much higher volumes than present market cap of $3 Trillion [lxvi] and 2030 could be as high as $18 Trillion [lxvii]. Agentic AI will be prevalent in this evolution so cybersecurity has to be foundational and not retrofitted. The techniques mentioned in this paper will be required as gold standard especially around deepfake solutions and causal AI models.

For crypto-exchanges deepfake scams are used to impersonate trusted figures like crypto exchange executives, tricking victims into sending over their money from fake cryptocurrency schemes. GenAI creates convincing content around fraudulent crypto projects and Agentic AI compromised to make subtle changes to wallet addresses and domain names to increase the risk of prompt injection. The crypto industry witnessed $4.6 billion in scam-related losses in 2024, a 24% increase from the previous year.[lxviii]

Crypto exchanges (and their investors) must address these threats now, mitigate and get the necessary insurance cover from the gold standard. This will require strong identity verification, applying detection tools for identifying AI-generated content and a rapid response protocol to detect deepfake attacks and patterns. The platforms deployed must use advanced threat detection, scanning 24X7 every crypto transfer against live threat intelligence to identify malicious behavioural patterns AI adversarial attacks. attempts.

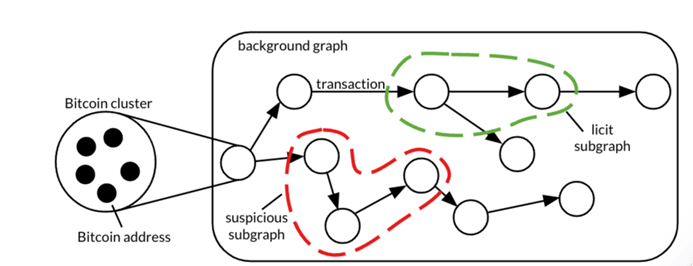

The use of causal AI and knowledge graphs in the crypto domain will be essential in identifying root cause of suspicious anomalies as shown by Eliptic [lxix] below.

Source: Eliptic

Conclusion

AI reshapes the cybersecurity tempo by speeding up defensive work, expanding the attack surface and introducing new enterprise cyber risk pressure points. Organizations that historically spent most of their cybersecurity budget on availability and confidentiality, paying less attention to data integrity (blockchain provenance), but are now adopting agentic AI are going to face some key cybersecurity headwinds. There are many branches of AI, but underlying all of them is the use and analysis of data to mimic human reasoning. Society over the years did not know it wanted electricity, telephones, or blockchain; people just knew they wanted light in buildings, better communication, and more trusted data provenance. These industrial landmarks are now embedded, and AI will follow the same pattern as a pervasive force enabling beneficial solutions at major scale.

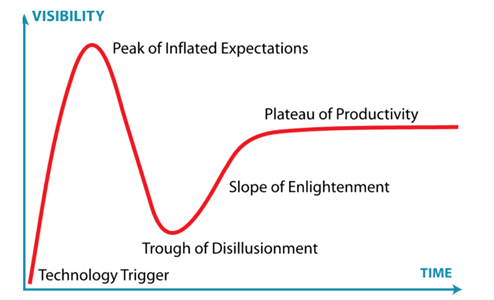

GenAI has created much hype and driven the current high valuation of AI companies. The limitations of GenAI, however, are becoming known, and it’s likely about to enter a trough of disillusionment,[lxx] where initial enthusiasm for new technology wanes as early adoption fails to meet expectations. Cybersecurity is going to be a key risk factor in this scenario. The graph below shows GenAI’s potential path, and transition is likely to occur quickly.

Source: English Wikipedia

AI, however, is here to stay, and it’s not a science that can be reduced to two letters. Machine learning and deep learning have been around a long time, and generative AI LLM’s have valuable uses in workflows and bringing many forms of data together. When human knowledge and causality are added, the real predictive power of AI will be unleashed, and the enlightenment and productivity phases shown will emerge. This will be AI’s real value to society as knowledge is captured, stored and re-used for generations to come.

Source: Vulcain [lxxi]

The United Nations Conference on Trade and Development (UNCTAD) reports that the AI market will reach $4.8 trillion by 2033,[lxii] and this means rapid business transformation with human and machine-driven labour. As the current management layer ages and retires, they will be the last to manage only humans as agentic AI and physical AI (robotics) are added to the workforce. Business decisions will be based on tracking, measurement, and data analysis, enabled by AI. There will be a historical record of an organization’s performance in cybersecurity and cyber risk management, opening the door to demonstrating by causality how humans, systems, and machines manage risk together. AI agents will operate at very high IQ levels with human knowledge, relationship building, judgment, and nuance vital factors. The diagram below summarises AI’s key risks covered in this paper.

Source: Palo Alto

AI agents will eventually obtain softer skills, but building relationships in this new era sits firmly in the hands of tomorrow’s leaders. Cyber incidents require human action as a vital piece of the process, even as the AI learning process matures. There will likely be a period of pushback regarding trusting machines, just as society was initially sceptical of other industrial changes. Trust (or better truth) is the key word, and there is no doubt that AI improves cybersecurity efficiency. Overreliance on AI is a significant concern due to bias, accuracy, and cybersecurity risks prevalent in machine learning training data and agentic AI.

This requires mature regulation, and cybersecurity is still behind the curve with 20th century regulation for 21st century exponential technology. In the United States, regulatory risk is diminishing as the country wants unbounded AI acceleration to show global leadership and remove barriers. Education is necessary to align a future risk-transfer strategy.

Scientific hypothesis can predict an outcome based on existing data, but it frequently misses the intangible factors that truly drive human connection, which is why causal AI is so important. Empathy and relationship building will keep humans in the loop.

The key challenge moving forward will be trust and how AI is governed. Trust is firm reliability in someone or something. Trusting the data or cloud in an enterprise network is useless without the metrics to develop formal situational awareness of how reliable these assets (including agents) really are and what they’re doing with the data, services, and applications they are hosting. Because of the rise in new cyberattacks, we must move from trust to truth, which means undeniable independent proof where liability can be proved forensically in the court of law applying the facets of “trust but verify”.

For too long many organizations have failed to embrace data integrity. Failure to do so now, however, is a criminal offence. Data integrity is mandatory for the agentic AI era. If 82 percent of corporations are implementing agentic AI, those that don’t understand the risk impact, face a future-proofing failure. With embedded blockchain, effective mitigation, trust, and truth, organizations can achieve the benefits of AI. Adversaries now influence AI models as well as manipulating traditional software. They put poisoned data into training pipelines, craft inputs that distort outputs, and clandestinely interfere with automated decisions. One compromised source can influence downstream workflows before anyone notices. Security leaders must tighten validation and monitoring model behaviour over a time series, to ensure teams recognize early signs of integrity failures. As finance shifts to automation powered by tokenised money, stablecoin payments, real time identity and AI native transactions, the underlying security of these rails will be of paramount importance. Businesses must create agent-safe API’s and embed cryptographic trust in the tokens they create and use.

[i] https://modelcontextprotocol.io/docs/getting-started/intro

[ii] https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

[iii] https://en.wikipedia.org/wiki/OWASP

[iv] https://cybersecurityventures.com/cyberwarfare-report-intrusion/

[v] https://www.biometricupdate.com/202406/deloitte-predicts-losses-of-up-to-40b-from-generative-ai-powered-fraud

[vi] https://www.gartner.com/en/newsroom/press-releases/2025-06-25-gartner-predicts-over-40-percent-of-agentic-ai-projects-will-be-canceled-by-end-of-2027

[vii] https://www.realitydefender.com/insights/agentic-ai-deepfake-fraud

[viii] https://www.statista.com/chart/28878/expected-cost-of-cybercrime-until-2027/

[ix] https://www.radware.com/

[x] https://www.linkedin.com/pulse/model-poisoning-threat-implications-fraud-credit-risk-sharad-gupta/

[xi] https://www.fortinet.com/resources/cyberglossary/cia-triad

[xii] https://guardtime.com/

[xiii] https://en.wikipedia.org/wiki/Infostealer

[xiv] https://hub-cpl.thalesgroup.com/iam-devices-lp/fido-token?utm_source=google&utm_medium=cpc&utm_campaign=en_glob_iam_hw&utm_content=token&utm_term=fido%20token&utm_source=google&utm_medium=cpc&utm_campaign=en_glob_iam_hw&utm_content=token&utm_term=fido%20token&gad_source=1&gad_campaignid=22586303919&gbraid=0AAAAAD_tGURNp8RIrypFj120eQGvDS9XR&gclid=CjwKCAiAraXJBhBJEiwAjz7MZXyVftKz4oLDQonT9HLLIqHkBdWFOjtMz7nnoQHmr4hXqagrdbcRvxoCO4oQAvD_BwE

[xv] https://nhimg.org/wp-content/uploads/2025/09/SailPoint-The-Rising-Risk-of-AI-Agents-Expanding-the-Attack-Surface-report-SP2648-.pdf

[xvi] https://blogs.nvidia.com/blog/ai-tokens-explained/

[xvii] https://cocolevio.ai/

[xviii] https://thehackernews.com/2025/06/new-tokenbreak-attack-bypasses-ai.html

[xix] https://hiddenlayer.com/

[xx] https://github.com/

[xxi] https://www.thalesgroup.com/en/news-centre/press-releases/artificial-intelligence-fuels-rise-hard-detect-bots-now-make-more-half

[xxii] https://en.wikipedia.org/wiki/Microsoft_Copilot

[xxiii] https://www.anthropic.com/news/model-context-protocol

[xxiv] https://seekingalpha.com/news/4401815-openai-launches-new-ai-agent-deep-research?source=acquisition_campaign_google&campaign_id=21672206354&internal_promotion=true&utm_source=google&utm_medium=cpc&utm_campaign=21672206354&adgroup_id=184855029974&utm_content=776356116846&ad_id=776356116846&keyword=&matchtype=&device=c&placement=&network=g&targetid=dsa-1463805041937&utm_term=184855029974%5Edsa-1463805041937%5E%5E776356116846%5E%5E%5Eg&gad_source=1&gad_campaignid=21672206354&gbraid=0AAAAACup1Pyk56_ysXtSIkF__eA0Ixfub&gclid=Cj0KCQiA0KrJBhCOARIsAGIy9wCiutc-KtZvrl7winjEDBbcHQ39J-hewaMccFMMIjZRNoZMiHrikeIaAmRWEALw_wcB

[xxv] https://thehackernews.com/2025/11/researchers-find-chatgpt.html

[xxvi] https://www.radware.com/security/threat-advisories-and-attack-reports/shadowleak/

[xxvii] https://arxiv.org/abs/2509.10540

[xxviii] https://zenity.io/research/agentflayer-vulnerabilities

[xxix] https://en.wikipedia.org/wiki/Explainable_artificial_intelligence

[xxx] https://www.thalesgroup.com/en

[xxxi] https://csrc.nist.gov/pubs/ai/100/2/e2023/final

[xxxii] https://en.wikipedia.org/wiki/Computational_linguistics

[xxxiii] https://www.researchgate.net/

[xxxiv] https://vulcain.ai/

[xxxv] https://www.crowdstrike.com/en-us/platform/charlotte-ai/

[xxxvi] https://www.first.org/cvss/

[xxxvii] https://thehackernews.com/2025/10/new-research-ai-is-already-1-data.html

[xxxviii] https://thehackernews.com/2025/11/new-browser-security-report-reveals.html

[xxxix] https://www.asahi.com/ajw/articles/16157927

[xl] https://arxiv.org/pdf/2511.15304

[xli] https://bdtechtalks.substack.com/p/legalpwn-the-prompt-injection-attack

[xlii] https://www.anthropic.com/news/disrupting-AI-espionage

[xliii] https://en.wikipedia.org/wiki/Tay_(chatbot)

[xliv] https://humanfirewall.io/case-study-on-samsungs-chatgpt-incident/

[xlv] https://www.theguardian.com/world/2022/jun/25/european-leaders-deepfake-video-calls-mayor-of-kyiv-vitali-klitschko

[xlvi] https://www.courthousenews.com/ozy-media-exec-says-he-impersonated-media-exec-to-scam-goldman-sachs/

[xlvii] https://en.wikipedia.org/wiki/Colonial_Pipeline_ransomware_attack

[xlviii] https://www.twingate.com/blog/tips/Yum-data-breach

[xlix] https://www.oligo.security/blog/shadowmq-how-code-reuse-spread-critical-vulnerabilities-across-the-ai-ecosystem

[l] https://www.secureworld.io/industry-news/confusedpilot-exploits-ai-tools-cloud-security-flaws

[li] https://www.insurancenews.com.au/daily/shallowfake-images-fuel-motor-fraud?utm_source=chatgpt.com

[lii] https://cloud.google.com/blog/topics/threat-intelligence/oracle-ebusiness-suite-zero-day-exploitation

[liii] https://www.securityweek.com/700credit-data-breach-impacts-5-8-million-individuals/

[liv] https://www.bitdefender.com/en-gb/blog/hotforsecurity/doordash-says-data-breach-at-third-party-vendor-exposes-personal-data-of-customers-and-employees

[lv] https://noma.security/blog/geminijack-google-gemini-zero-click-vulnerability/

[lvi] https://www.zurich.com/media/magazine/2022/three-reasons-to-take-note-of-the-sinister-rise-of-deepfakes?utm_source=chatgpt.com

[lvii] https://www.swissre.com/institute/research/sonar/sonar2025/how-deepfakes-disinformation-ai-amplify-insurance-fraud.html?utm_source=chatgpt.com

[lviii] https://www.truuth.id/

[lix] https://www.tigergraph.com/

[lx] https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

[lxi] https://www.nist.gov/itl/ai-risk-management-framework

[lxii] https://www.splunk.com/en_us/blog/learn/mean-time-to-detect-mttd.html

[lxiii] https://www.lumificyber.com/fundamentals/what-is-mean-time-to-respond-mttr/

[lxiv] https://guardtime.com/

[lxv] https://paymentscmi.com/insights/agentic-ai-stablecoins-future-finance/

[lxvi] https://www.forbes.com/digital-assets/crypto-prices/

[lxvii] https://www.nadcab.com/blog/crypto-token-market-and-global-forecast

[lxviii] https://www.reuters.com/technology/crypto-scams-likely-set-new-record-2024-helped-by-ai-chainalysis-says-2025-02-14/

[lxix] https://www.elliptic.co/

[lxx] https://fastforward.boldstart.vc/ai-is-falling-into-trough-of-disillusionment/

[lxxi] https://vulcain.ai/

[lxii] https://unctad.org/news/ai-market-projected-hit-48-trillion-2033-emerging-dominant-frontier-technology

01.2026